*** EXECUTIVE SUMMARY ***

While reviewing Barracuda Networks’ Web Security Agent (WSA), I identified three security vulnerabilities.

Exploitation of these vulnerabilities allows disclosure and alteration of local WSA settings, shutting down the WSA service, and local elevation of privilege to execute code as SYSTEM.

These issues were initially discovered in WSA version 4.3.1.53. They were responsibly disclosed in early June 2015 and fully resolved at the end of September, as part of Barracuda’s 4.4.1 release.

*** WALKTHROUGH ***

I’m purposely leaving these details out of this section for now. Developers interested in evaluating the security posture of their applications will hopefully get something out of it, but there’s no reason to rush the details until users have had a chance to update away from the vulnerable versions. [UPDATE: As of 03/16/2016, details are now included.]

The premise was simple — get around WSA.

There weren’t any obvious results for known flaws on Google, Exploit-DB, or similar sites, so I was on my own.

Interacting with the system tray icon for WSA didn’t reveal a whole lot. I noticed that some actions (like Sync or Ping Service Hosts) seemed to briefly turn WSA off and then back on. It was only for a few seconds at most, though. I needed more.

I found WSA’s installation directory, hoping it might have some really obvious weaknesses, but nothing jumped out at me. There was a configuration tool, so I decided to start with that.

Some of the applications, including the configuration tool, were .NET assemblies. That meant I could use ILSpy to decompile them and see what was going on behind the scenes, assuming the code hadn’t been obfuscated. Amazingly enough, it hadn’t. The decompiled code was nice and readable.

Immediately, I spotted something. The configuration tool was checking whether the password entered by the user matched the value from its settings. Score! If the application was reading the password, that meant I could too.
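
The pattern boils down to something like the sketch below. It is a hypothetical Python illustration, not the tool’s decompiled C#. `check_password_plaintext` mirrors what the tool appeared to do. `make_record` and `check_password_hashed` are made-up names showing a commonly recommended alternative: a salted PBKDF2 digest plus a constant-time comparison. With the hashed version, a debugger attached at the comparison would see only a salt and digest rather than the password itself. An administrator could still patch the check out entirely, though.

```python
import hashlib
import hmac
import os
from typing import Tuple

ITERATIONS = 200_000  # illustrative work factor, not a tuned recommendation


def check_password_plaintext(entered: str, stored: str) -> bool:
    # What the tool appeared to do: compare against the stored password itself,
    # so the secret sits in memory where an attached debugger can read it.
    return entered == stored


def make_record(password: str) -> Tuple[bytes, bytes]:
    # Store a random salt and a PBKDF2-HMAC-SHA256 digest instead of the password.
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def check_password_hashed(entered: str, record: Tuple[bytes, bytes]) -> bool:
    # Re-derive the digest from the entered password and compare in constant time.
    salt, expected = record
    candidate = hashlib.pbkdf2_hmac("sha256", entered.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, expected)


if __name__ == "__main__":
    record = make_record("correct horse")
    print(check_password_hashed("correct horse", record))  # True
    print(check_password_hashed("guess", record))          # False
```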

I launched the configuration tool and then Visual Studio, both with elevated rights, and attached Visual Studio’s debugger to the configuration tool’s process. This way, when I submitted a password to the tool, I could see the password it was being compared against. I then re-ran the configuration tool, entered the password I had just found, and was in. I now had the ability to view and change settings.

It was a start… but requiring admin rights isn’t exactly a ‘bypass’. I wanted to see just how far I could take it.

Thus, the first vulnerability was discovered…

WSA service has decryptable config that is readable by local users (BNSEC-6054)

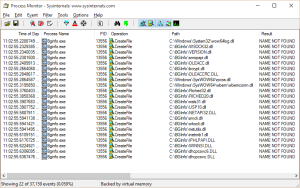

Using ILSpy, I found the encrypted .INI file in which WSA kept all of its settings. I took a brief look at the library it used, pwe.dll, to figure out how it was encrypting and decrypting the file’s contents. It wasn’t a .NET assembly, so I didn’t get very far with it. Thankfully, I realized there was an even easier way: I could just call pwe.dll directly, the same way the WSA executables did.

As a first attempt, I read the file directly through some proof-of-concept code in C#, called the library, and got back a string with all of the settings. Awesome.
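
Calling the library was enough because the ability to decrypt shipped with the agent, and any local user could load it. The sketch below uses XOR with a hard-coded key as a deliberately simple stand-in. pwe.dll’s real algorithm was never recovered, and `BUNDLED_KEY` and `bundled_crypt` are hypothetical names. A stronger cipher wouldn’t change the outcome as long as the key travels with the program.

```python
# Hypothetical stand-in key; the real scheme inside pwe.dll was never recovered.
BUNDLED_KEY = b"hypothetical-key"


def bundled_crypt(data: bytes, key: bytes = BUNDLED_KEY) -> bytes:
    # XOR each byte with the repeating key. XOR is its own inverse,
    # so the same call both "encrypts" and decrypts.
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))


if __name__ == "__main__":
    settings = b"[Settings]\nPassword=hypothetical\n"
    on_disk = bundled_crypt(settings)    # what the agent would write to its .INI file
    recovered = bundled_crypt(on_disk)   # what any local user can do with the same routine
    print(recovered.decode("utf-8"))
```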

Of primary interest, of course, was the configuration password. Given how often users and organizations reuse passwords, knowing the WSA password could give an attacker leverage against other systems or applications.

Having a list of whitelisted applications was also handy. That provided my first way around WSA. If ‘example.exe’ was whitelisted, I could simply rename my browser, malicious code, or whatever to match that name and WSA wouldn’t try to filter or monitor it. Knowing the IP ranges and domains excluded from filtering could be advantageous, too.
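
A filter that trusts the file name alone can be sketched like this. It’s a hypothetical Python illustration, not WSA’s code. `WHITELIST` and `is_whitelisted` are made-up names, and the case-insensitive match is an assumption based on Windows file names being case-insensitive. Because only the base name is compared, any binary renamed to `example.exe` passes.

```python
import ntpath

# Hypothetical whitelist entry (stored lowercase), like those recovered from the settings file.
WHITELIST = {"example.exe"}


def is_whitelisted(image_path: str, whitelist=WHITELIST) -> bool:
    # ntpath splits Windows paths correctly on any OS. Only the final
    # component is compared (case-insensitively); the binary's contents,
    # signature, and location are ignored.
    return ntpath.basename(image_path).lower() in whitelist


if __name__ == "__main__":
    print(is_whitelisted(r"C:\Program Files\Vendor\example.exe"))  # True
    print(is_whitelisted(r"C:\Users\me\Downloads\EXAMPLE.EXE"))    # True: a renamed binary
    print(is_whitelisted(r"C:\Users\me\Downloads\browser.exe"))    # False
```

Keying the decision on something that renaming can’t change, such as a code-signing publisher or a file hash, would likely close this particular gap.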

Now that I had a way around WSA if I wanted, I decided to make it harder on myself… Rather than just skirting around the filter, I wanted to see if I could actually mess with it.

That led to my second vulnerability finding…

WSA service allows for arbitrary local reconfiguration at net.tcp://127.0.0.1:32323/ (BNSEC-6053, later absorbed into BNSEC-6147)

ILSpy made things incredibly easy. The service had a listener on 127.0.0.1 and there didn’t seem to be any authentication required. The WSAMonitor app, which runs in the system tray, provided all of the information I needed to take advantage of the listener.
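
Binding to 127.0.0.1 keeps remote machines out, but it isn’t authentication. Any local process can typically connect, regardless of which account it runs under. Here is a minimal Python sketch for checking whether something is listening on a loopback port, such as 32323. It only attempts a TCP connection. It doesn’t speak WCF’s net.tcp framing or invoke any operation, and `loopback_port_open` is a hypothetical helper name.

```python
import socket


def loopback_port_open(port: int, host: str = "127.0.0.1", timeout: float = 1.0) -> bool:
    # Returns True if a TCP connection succeeds. That proves only that
    # something is listening; no WCF net.tcp handshake is attempted.
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    print(loopback_port_open(32323))
```

On a machine where the vulnerable agent’s listener was up, this would presumably print `True` for any local user.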

I wrote a bit more code and called net.tcp://127.0.0.1:32323/StopService. The service stopped. Or, I should say, the filtering stopped; the Windows service itself was still running. I had to confirm the stop by checking log entries in the WSA.log file. I was also able to verify it through a Barracuda-provided website that shows whether or not WSA is filtering traffic. The agent’s icon in the system tray, though, remained unchanged, as if it were still connected. This meant WSA could be disabled without the user or an admin noticing.

I eventually also wrote calls to GetSettings and SetSettings, which let me read and alter the local WSA settings more easily. Any changes would be reset by the next policy sync, but that left enough of a window to make the changes I wanted. Changing the password was fun, but I also realized that changing how often service hosts get evaluated alleviated some of the issues I had with the program. Setting the interval to -1 disabled the automatic pinging of the hosts, which normally happened every five minutes.

As much fun as I had trying to find ways around WSA, I knew that if I could find these methods, the ‘bad guys’ could, too.

Barracuda has a pretty nice bug bounty program through Bugcrowd, but WSA apparently isn’t within its scope. I ended up submitting the issue to CERT’s CVE system and also sent the details via encrypted email to the Barracuda security team.

A few days after my submission, I took one last look at the WSA code, looking for anything I might have missed.

That’s when I discovered the third vulnerability…

WSA local EoP to SYSTEM via net.tcp://127.0.0.1:32323/Update (BNSEC-6147)

This one was really straightforward. I think I had glossed over it initially because I was focused on evading WSA rather than exploiting it.

The danger of this routine was that it was too trusting. It accepted a filename and arguments and would execute that process as the service, which runs as SYSTEM. Not good.

As soon as I found it, I reported it the same way as the others. Barracuda was quick to acknowledge the issue and provided a reasonable timeline for fixing it.

The fix for this was simple enough: remove all of the parameters, so that calling Update can do only one thing, which is run the update.
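
The before-and-after can be sketched as two functions that only build the command line a service would launch. Nothing is executed here. This is hypothetical Python, not Barracuda’s code: `UPDATER` is a placeholder path and both function names are made up. Before the fix, the caller picks the executable and arguments. After it, the endpoint takes no parameters and can only ever start the updater.

```python
from typing import List, Sequence

# Placeholder path; the real updater location isn't part of this write-up.
UPDATER = r"C:\Hypothetical\WSA\Updater.exe"


def update_command_before(filename: str, arguments: Sequence[str]) -> List[str]:
    # Vulnerable shape: the caller chooses both the program and its arguments,
    # and a SYSTEM service would launch whatever comes back.
    return [filename, *arguments]


def update_command_after() -> List[str]:
    # Fixed shape: no parameters, so the only command is the updater itself.
    return [UPDATER]


if __name__ == "__main__":
    # Before: any local caller could have chosen an arbitrary program to run as SYSTEM.
    print(update_command_before(r"C:\Users\Public\payload.exe", ["--anything"]))
    print(update_command_after())
```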

*** OVERALL EXPERIENCE ***

Throughout the entire process, I couldn’t have asked for a better contact at Barracuda than Justin Kelly. He and the rest of the BNSEC team did a great job keeping me in the loop. Justin provided the perfect blend of professionalism and casualness. It was clear that they were taking things seriously on their end, but I never got the feeling that they were going to go Oracle-style, with legal threats for picking apart their product a bit. I’m looking forward to working with them more in the future.
